TECH NEWS – We no longer have to settle for crude, amateurish tricks that give a voice to a person who may be deceased (or fictional) by pasting someone else's mouth over theirs…

More unsettling, however, is who is behind EMO: Emote Portrait Alive. The team, the Institute for Intelligent Computing, is not dangerous in itself, but the fact that it built the tool as part of the Alibaba Group is. Alibaba is one of China's biggest companies, reportedly with countless connections to the Chinese Communist Party… Still, what the team has put together is thought-provoking.

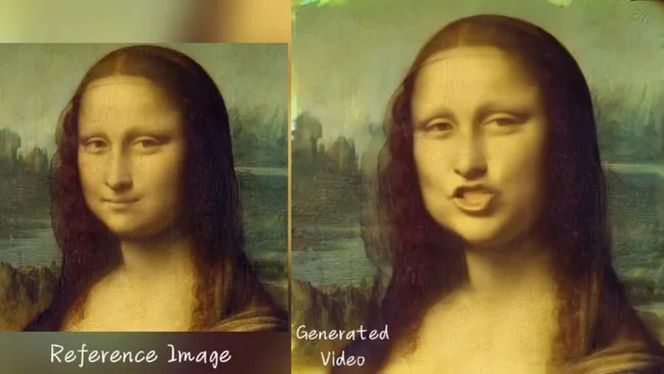

The tool takes a single reference image, extracts generated motion frames, and combines them with vocal audio through a complex diffusion process. The facial region is merged with multi-frame noise samples, which are then denoised while the generated frames are kept in sync with the audio. The end result is a video in which the subject not only lip-syncs but also shows a range of facial expressions and head poses.

Several examples have been posted on Twitter. Our favorite was the Mona Lisa. Everyone knows the painting, but watching her start quoting Shakespeare is incredible, and the quality is decent: human-looking facial expressions, mouth movements that look perfect, and the painted lady's head moves the whole time, so the result is anything but sterile! Mona Lisa even blinks, although few people blink that slowly, which may be the only thing the developers could still improve. We could also point out that at the 23-second mark, the algorithm makes a small mistake above the eyebrow on the left of the frame (her right eyebrow)…

So, the result is good, but it raises the question: when will this start being used to cause harm? Deepfake videos are already common, and malicious users often rely on artificial intelligence to make them. Let's just hope we never see ourselves saying something we would never say.

2. Mona Lisa talking Shakespeare pic.twitter.com/26k29aAz1P

— Min Choi (@minchoi) February 28, 2024
