TECH NEWS – Google employees allegedly called Bard a “pathological liar.”

Bloomberg obtained several internal documents suggesting that Google employees reacted badly to the release of Google Bard in March because they believed the technology was not ready. Employees say the chatbot provides low-quality, inaccurate, and even dangerous responses. Their descriptions of it include “pathological liar” and “cringe-worthy.” Despite that, the company’s CEO Sundar Pichai said Bard combined the breadth of the world’s knowledge with the power, intelligence, and creativity of Google’s large language model (LLM). Bard was reportedly rushed to market in response to OpenAI’s ChatGPT, which had created a “code red” situation within the company.

According to Bloomberg, an employee posted a message in an internal Google messaging group that seven thousand people saw. They wrote, “[Google] Bard is worse than useless: please do not launch.” Before the launch, Jen Gennai, Google’s artificial intelligence governance lead, reportedly dismissed a risk assessment submitted by her team, which believed Bard’s answers could cause harm. Bloomberg also cited examples: advice on landing a plane that would cause a crash, and scuba diving instructions that could cause severe injury or even death.

Meredith Whittaker, a former Google manager, told Bloomberg that AI ethics had taken a back seat within the company. ChatGPT also has problems with truth claims (how much trouble will Elon Musk’s announced TruthGPT be?) and is clumsy about citing the sources behind its answers. Google, for its part, simply calls Bard an experiment and claims that responsible AI is one of the company’s top priorities…

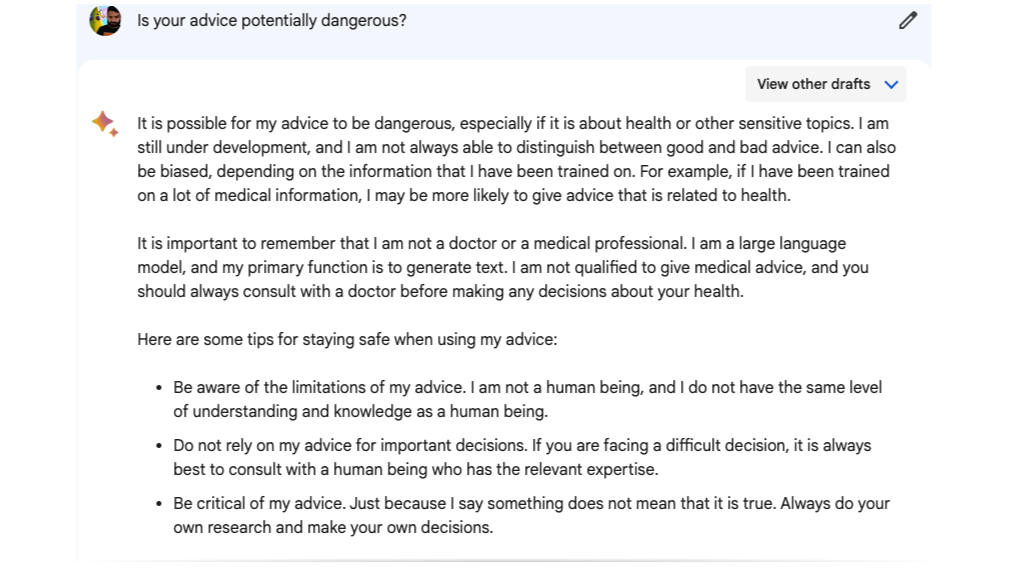

PCGamer asked the chatbot whether its answers are potentially dangerous. It replied: “It is possible for my advice to be dangerous, especially if it is about health or other sensitive topics. I am still under development, and I cannot always distinguish between good and bad advice.” Ouch.

Source: PCGamer
