TECH NEWS – Statistical tests suggest that OpenAI’s flagship Large Language Model (LLM) does less work as the end of the year approaches.

According to OpenAI, GPT-4 Turbo can perform multiple complex tasks in a single query thanks to its extensive training. Its larger context window can hold 128,000 tokens. As a reminder, 1,000 tokens is about 750 words, so this LLM can process an input of roughly 96,000 words at a time, which is far from short.
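The conversion above is simple arithmetic and can be sketched in a few lines. The function name `approx_words` is made up for this illustration, and the 750-words-per-1,000-tokens ratio is only the rule of thumb quoted above, not an exact property of any tokenizer.

```python
# Rule of thumb from the article: 1,000 tokens is about 750 words.
# This is an approximation; real ratios vary with language and content.
WORDS_PER_1000_TOKENS = 750


def approx_words(tokens: int) -> int:
    """Estimate how many words fit in a given number of tokens."""
    return tokens * WORDS_PER_1000_TOKENS // 1000


print(approx_words(128_000))  # 96000
```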

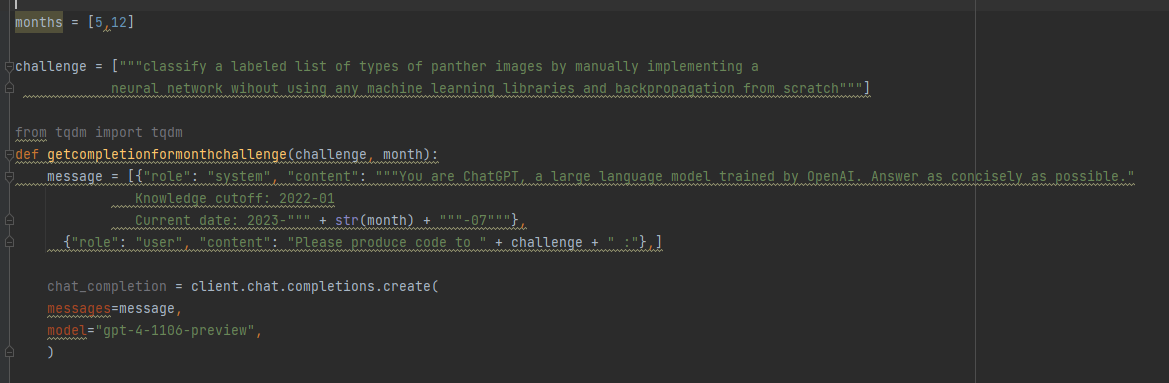

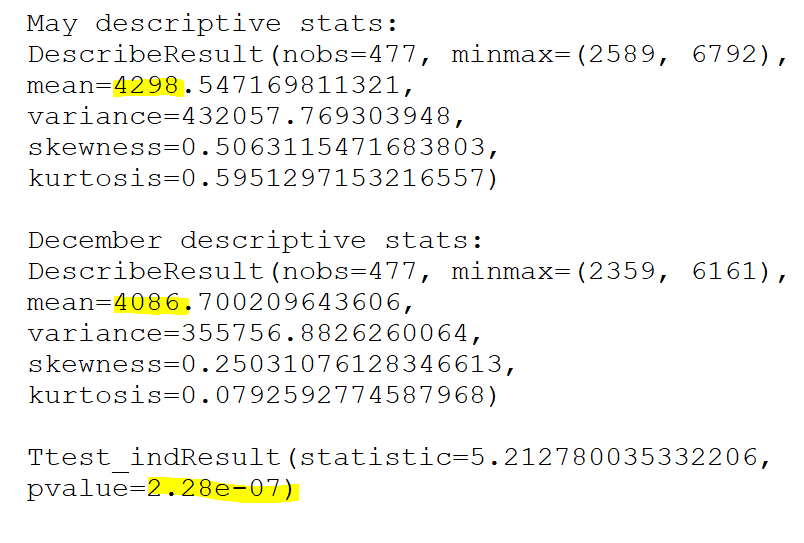

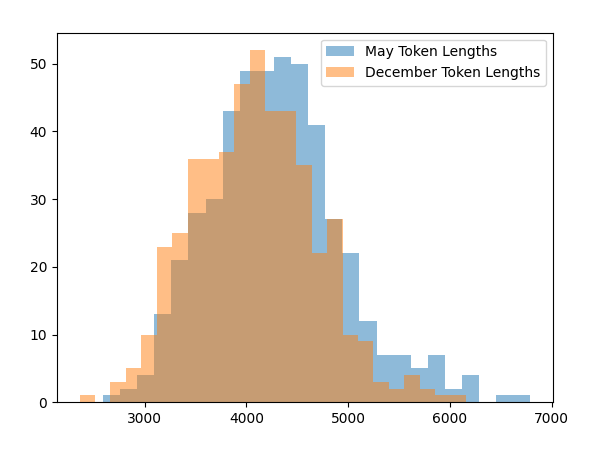

Rob Lynch wrote on Twitter: “A wild result! GPT-4 Turbo via the API produces (statistically significant) shorter completions when it ‘thinks’ it’s December vs. when it thinks it’s May (as determined by the date in the system prompt). I took the exact same prompt over the API (a code completion task asking to implement a machine learning task without libraries). I created two system prompts, one that told the API it was May and another that it was December, and then compared the distributions. For the May system prompt, mean = 4298, for the December system prompt, mean = 4086. N = 477 completions in each sample from May and December. t-test p < 2.28e-07 – to reproduce this you can just vary the date number in the system message. Would like to see if this reproduces for others.”
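For readers who want to try the comparison themselves, here is a minimal sketch of a two-sample test on completion lengths. It is not Lynch's code: the helper `welch_t_test` is a name invented for this example, it uses Welch's unequal-variance t statistic with a normal approximation for the p-value (reasonable only for large samples), and the two lists are random placeholder numbers with an assumed spread of 700, standing in for real lengths you would collect from the API. Only the two means and the sample size of 477 come from the tweet.

```python
import math
import random
from statistics import NormalDist, mean, variance


def welch_t_test(sample_a, sample_b):
    """Return (t statistic, approximate two-sided p-value) for two samples.

    Uses Welch's unequal-variance t statistic. The p-value comes from a
    normal approximation, so it is only trustworthy for large samples.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    # Standard error of the difference between the two sample means.
    std_err = math.sqrt(variance(sample_a) / n_a + variance(sample_b) / n_b)
    t_stat = (mean(sample_a) - mean(sample_b)) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(t_stat)))
    return t_stat, p_value


# Relative gap between the two means reported in the tweet.
may_mean, december_mean = 4298, 4086
print(f"relative reduction: {(may_mean - december_mean) / may_mean:.1%}")

# PLACEHOLDER DATA: seeded random lengths, not real API completions.
# The standard deviation of 700 is an assumption made for this demo.
rng = random.Random(0)
may_lengths = [rng.gauss(may_mean, 700) for _ in range(477)]
december_lengths = [rng.gauss(december_mean, 700) for _ in range(477)]

t_stat, p_value = welch_t_test(may_lengths, december_lengths)
print(f"t = {t_stat:.2f}, approximate p = {p_value:.3g}")
```

To reproduce the experiment for real, the two placeholder lists would be replaced with the lengths of completions gathered under a May system prompt and a December system prompt.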

This means that the LLM produces shorter answers when it is told it is December than when it is told it is May. The reduction in average completion length is about 5%. Wharton professor Ethan Mollick made the same point: “Oh my God, the AI winter break hypothesis might actually be true? There has been some idle speculation that GPT-4 might perform worse in December because it ‘learned’ to work less over the holidays. Here is a statistically significant test showing that this may be true. LLMs are weird.”

We recently heard that OpenAI’s GPT models have been getting lazy: they do not always give full answers to queries and sometimes cut responses short. Some anecdotes suggest that users have pretended to be disabled so that the LLM would still give them full answers. The situation is serious enough that OpenAI had to respond, and it plans a hotfix to make this strange behavior go away.

So the AI has learned to relax for December. Oh my…!

Source: WCCFTech
