TECH NEWS – Nvidia, AMD and Intel launched their frame generation technologies in 2022, 2023 and 2024 respectively, each with its own differences, and now Intel is working on something exciting.

Frame generation is offered by both Nvidia DLSS and AMD FSR, and now Intel is trying to do the same, but with a slight difference. Frame generation adds input lag, so there is a slightly longer delay between, for example, clicking the left mouse button and seeing your weapon fire. Intel wants to overcome this with frame extrapolation, a technology it calls GFFE (G-Buffer Free Frame Extrapolation).

AMD, Intel and Nvidia all use the same basic approach, frame interpolation: the GPU renders two frames in a row and holds them in the graphics card's VRAM instead of displaying them straight away. Then, instead of rendering another frame, the GPU runs either a pair of compute shaders (AMD's FSR solution) or an AI neural network (Nvidia DLSS, Intel XeSS) to analyze the two frames for changes and motion. It uses this information to generate a new frame, which is placed between the two rendered frames and displayed on the monitor. Any errors in the generated frame are hard to spot because each one is on screen for only a very short time.
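To make the interpolation approach concrete, here is a minimal toy sketch in Python, assuming frames are 1D lists of pixel brightness and that all motion is a single global shift. The functions `shift`, `estimate_motion`, `interpolate` and `display_order` are hypothetical illustrations, not DLSS, FSR or XeSS code, which use far more elaborate motion analysis. What the sketch does show is the ordering: the generated frame needs both of its neighbors, so the newer rendered frame has to wait.

```python
# Toy model of frame interpolation (assumption: NOT the actual DLSS, FSR or XeSS code).
# Frames are 1D lists of pixel brightness; motion is one global integer shift.

def shift(frame, dx):
    """Move the frame's content dx pixels to the right, repeating edge pixels."""
    n = len(frame)
    return [frame[min(max(i - dx, 0), n - 1)] for i in range(n)]

def estimate_motion(prev, curr, max_shift=4):
    """Return the shift that best maps prev onto curr (lowest sum of absolute differences)."""
    best_dx, best_err = 0, float("inf")
    for dx in range(-max_shift, max_shift + 1):
        err = sum(abs(a - b) for a, b in zip(shift(prev, dx), curr))
        if err < best_err:
            best_dx, best_err = dx, err
    return best_dx

def interpolate(prev, curr):
    """Synthesize the frame halfway between two rendered frames."""
    dx = estimate_motion(prev, curr)
    return shift(prev, dx // 2)  # half the motion, rounded down to whole pixels

def display_order(rendered):
    """Place a generated frame between each pair of rendered frames.
    Every rendered frame after the first is held back until the in-between frame is shown."""
    shown = [rendered[0]]
    for prev, curr in zip(rendered, rendered[1:]):
        shown += [interpolate(prev, curr), curr]
    return shown

f0 = [0, 0, 9, 0, 0, 0, 0, 0]
f1 = [0, 0, 0, 0, 9, 0, 0, 0]  # the bright pixel moved 2 to the right
print(display_order([f0, f1]))
# [[0, 0, 9, 0, 0, 0, 0, 0], [0, 0, 0, 9, 0, 0, 0, 0], [0, 0, 0, 0, 9, 0, 0, 0]]
```

With an object moving two pixels between rendered frames, the generated frame places it one pixel along, halfway between them, and `f1` only reaches the screen after that generated frame.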

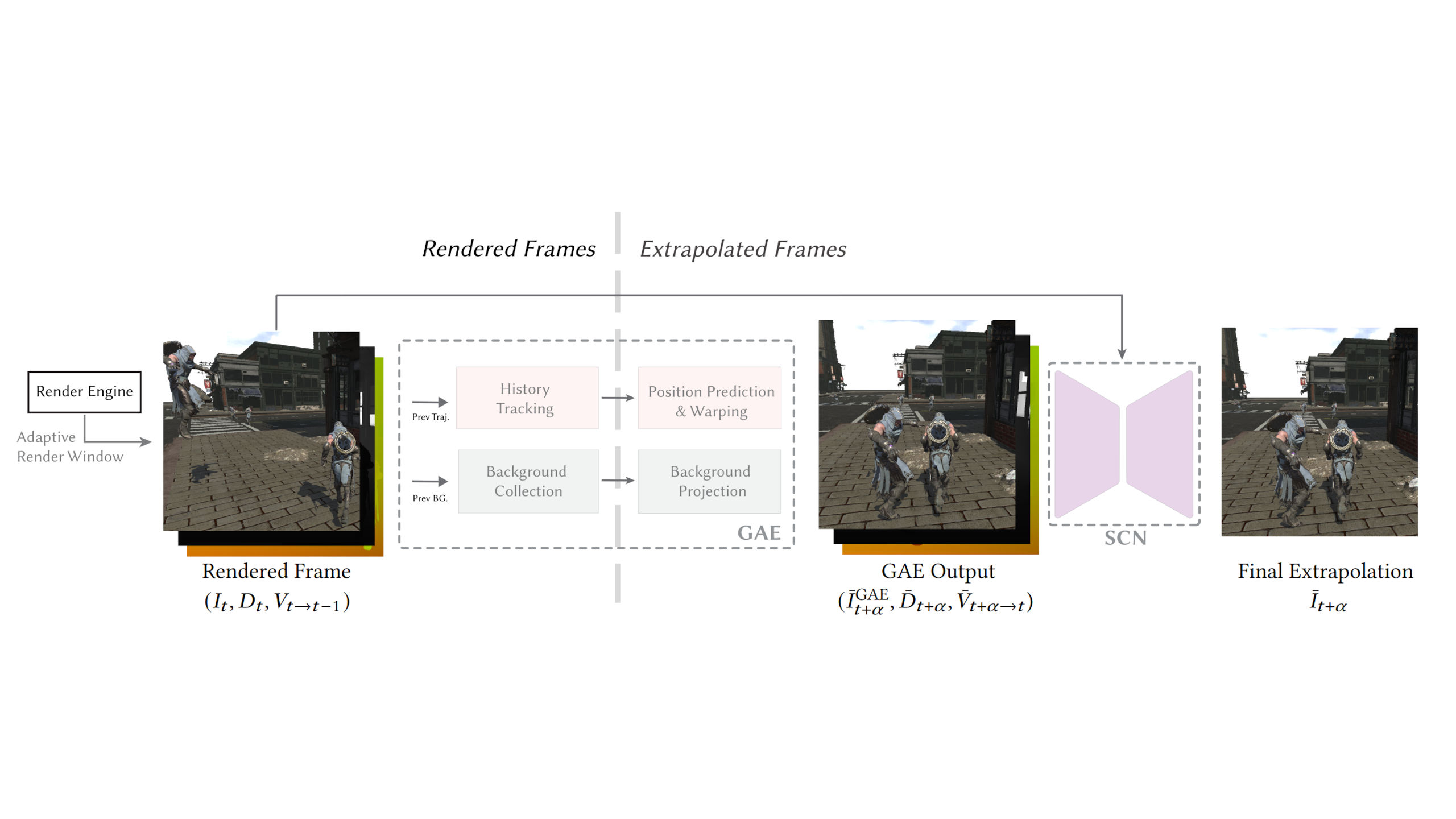

Game engines poll for input changes at fixed intervals and apply them to the next frame they render. No such input is applied to the generated frames, and because a "normal" frame has to be held back until the "artificial" frame in front of it has been created, there is some delay between moving the mouse and seeing the movement on screen. GFFE is designed to avoid this: since it holds no frames back, it should add little input lag, and it could be implemented at the driver level rather than being integrated into each game's rendering pipeline.
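The latency cost can be illustrated with a deliberately simplified timing model (an assumption for illustration, not measured data): with interpolation, a newly rendered frame waits while the in-between frame is generated and then shown for half a render interval, whereas with extrapolation the rendered frame is shown immediately. `added_latency_ms`, `output_fps` and the 3 ms generation time are hypothetical.

```python
# Simplified latency model (hypothetical, for illustration only; not measured data).
# render_fps: frames the GPU actually renders per second.
# gen_ms: time needed to generate one extra frame (3.0 ms below is an assumed value).

def added_latency_ms(render_fps, gen_ms, mode):
    """Extra delay before a newly rendered frame reaches the screen."""
    frame_ms = 1000.0 / render_fps
    if mode == "interpolation":
        # The new frame is held while the in-between frame is generated,
        # and that generated frame then occupies half a render interval.
        return gen_ms + frame_ms / 2
    if mode == "extrapolation":
        # The new frame is displayed at once; the predicted frame comes after it.
        return 0.0
    raise ValueError(f"unknown mode: {mode}")

def output_fps(render_fps):
    """One generated frame per rendered frame doubles the displayed frame rate."""
    return 2 * render_fps

for mode in ("interpolation", "extrapolation"):
    print(mode, output_fps(60), round(added_latency_ms(60, 3.0, mode), 1))
# interpolation 120 11.3
# extrapolation 120 0.0
```

In this model both approaches double the displayed frame rate; the difference is only in when the newest rendered frame reaches the screen. Real-world figures also depend on frame pacing and the render queue.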

This is where frame extrapolation comes in. Instead of holding rendered frames in a queue, the algorithm keeps a history of previously rendered frames and predicts the next frame from them. The extrapolated frame is then shown after the most recent "normal" frame, raising the frame rate. GFFE is quite fast (6.6 milliseconds to generate a 1080p frame) and does not need the engine's motion vectors or other G-buffer data, only the finished frames.

As graphics cards begin to plateau in shader throughput, cache size and memory bandwidth, solutions like this can and should be used to keep performance improving.
